This post demonstrates how to build scalable resume parsing with Amazon Bedrock Data Automation. It is a complete IDP guide covering CDK, Lambda, and S3 integration for automated document processing using GenAI on AWS.

It’s Monday morning, and your HR team just received 200 resumes for that critical engineering position. Each resume has a different format, layout, and structure. Someone needs to manually extract names, emails, skills, and work experience from each one.

This scenario plays out in organizations everywhere. Manual document processing is slow, prone to errors, and takes valuable time away from more meaningful work like actually evaluating candidates.

The core problem is simple: humans are great at understanding documents, but terrible at processing them at scale. We need a way to automatically extract structured data from unstructured documents.

This is where Intelligent Document Processing (IDP) comes in. IDP uses AI to read documents and extract the information you need, turning messy PDFs into clean, structured data.

In this post, we’ll build a system that automatically processes resumes. When someone uploads a PDF, our system will extract the key information and save it as structured JSON data. We’ll use Amazon Bedrock Data Automation to handle the AI processing, so we don’t need to manage models or write complex extraction logic.

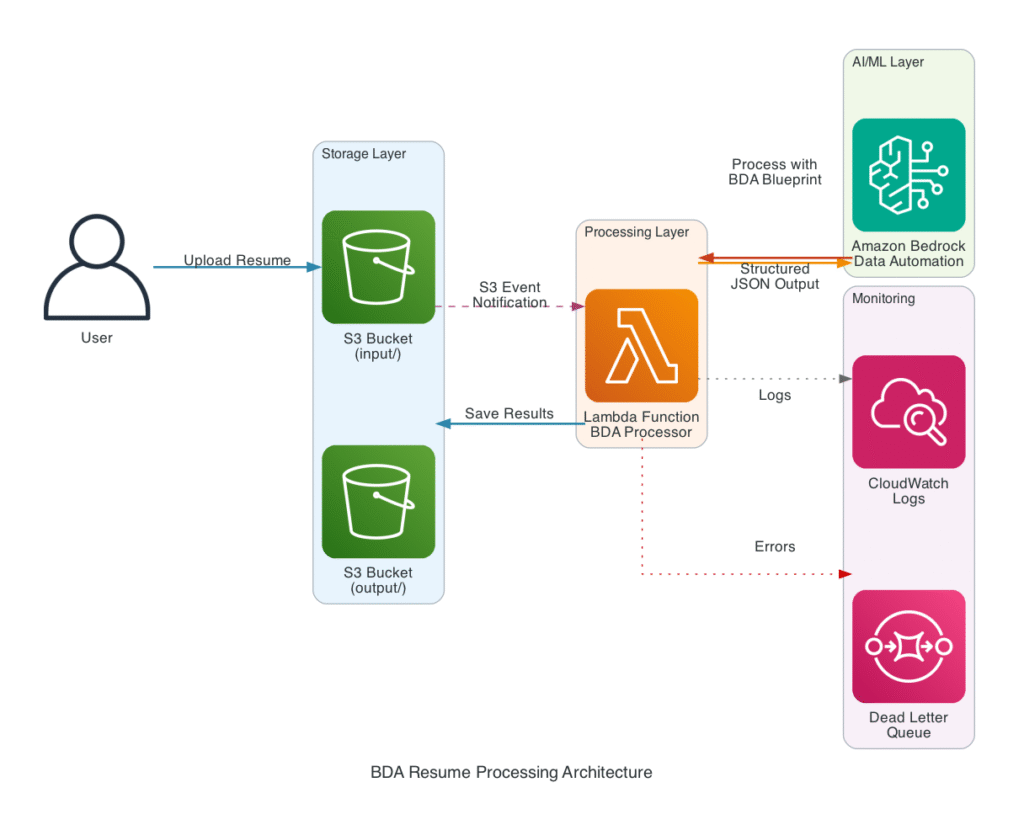

How We’ll Solve This

Our approach is straightforward: build an event-driven system that processes documents automatically. Here’s how it works:

What each piece does:

- Amazon S3: Stores uploaded resumes and processed results

- S3 Event Notifications: Triggers processing when new files arrive

- AWS Lambda: Coordinates the processing workflow

- Amazon Bedrock Data Automation: Reads the document and extracts structured data based on the BDA blueprint for custom output, defined in lambda/bda_parser/blueprint_schema.json

- Structured Output: Clean JSON data ready for your applications

This architecture handles the scaling automatically. Whether you process 1 resume or 1,000, the system adapts without any manual intervention.

What You’ll Need

Before we start building, make sure you have:

- AWS Account with appropriate permissions

- Amazon Bedrock access (you may need to request access)

- AWS CLI configured with your credentials

- AWS CDK installed (npm install -g aws-cdk)

- Python 3.11+ for Lambda functions

- Basic familiarity with AWS services

Project Structure:

resume-parser/

├── infrastructure/ # CDK infrastructure code

├── lambda/ # Lambda function code

├── blueprints/ # Bedrock Data Automation blueprint definitions

└── data/ # Sample resumes for testing

The Core Processing Logic

The main work happens in our Lambda function, where we connect to Bedrock Data Automation and process the documents. Let’s look at the key pieces.

Setting Up the Bedrock Data Automation Client

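# File: lambda/bda_parser/bda_client.py

import boto3

class BDAClient:  # class name is illustrative; the original snippet shows only __init__
    def __init__(self, region: str = 'us-east-1'):
        # Runtime (data plane) client used to submit and track processing jobs
        self.bda_client = boto3.client('bedrock-data-automation-runtime', region_name=region)

About the API: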

The bedrock-data-automation-runtime client handles document processing. Unlike control plane APIs that manage resources, this runtime client focuses on processing workloads and integrates directly with S3.

Starting Document Processing

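# File: lambda/bda_parser/bda_client.py

# Submit the document for asynchronous processing; the call returns immediately
response = self.bda_client.invoke_data_automation_async(
    blueprints=[{
        'blueprintArn': blueprint_arn,
        'stage': 'LIVE'
    }],
    inputConfiguration={
        's3Uri': document_uri
    },
    outputConfiguration={
        's3Uri': f"s3://{output_bucket}/output/"
    },
    dataAutomationProfileArn=profile_arn
)
# Keep the ARN so the job can be polled later
invocation_arn = response['invocationArn']

How this works: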

The invoke_data_automation_async API processes documents asynchronously. Key parameters:

- blueprints: Defines what data to extract (up to 40 blueprints supported)

- inputConfiguration: Points to your document in S3

- outputConfiguration: Where to save the results

- dataAutomationProfileArn: Required for authentication and billing

The API returns an invocationArn that you use to check processing status.
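As a rough sketch of how those parameters fit together, the helper below assembles the keyword arguments for invoke_data_automation_async from a bucket and an object key. The function name build_invoke_request and its argument names are illustrative and not taken from the repository; only the keys inside the returned dictionary come from the call shown above.

```python
from typing import Any, Dict


def build_invoke_request(
    input_bucket: str,
    key: str,
    output_bucket: str,
    blueprint_arn: str,
    profile_arn: str,
    stage: str = "LIVE",
) -> Dict[str, Any]:
    """Assemble keyword arguments for invoke_data_automation_async.

    Hypothetical helper: the name and signature are assumptions made for
    illustration; the dictionary keys mirror the call shown above.
    """
    if stage not in ("DEVELOPMENT", "LIVE"):
        raise ValueError(f"unsupported blueprint stage: {stage!r}")
    return {
        "blueprints": [{"blueprintArn": blueprint_arn, "stage": stage}],
        "inputConfiguration": {"s3Uri": f"s3://{input_bucket}/{key}"},
        "outputConfiguration": {"s3Uri": f"s3://{output_bucket}/output/"},
        "dataAutomationProfileArn": profile_arn,
    }
```

With a runtime client in hand, the result can be unpacked straight into the call, for example bda_client.invoke_data_automation_async(**build_invoke_request(...)). Keeping the request construction in a pure function makes it easy to unit test without touching AWS.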

Checking Processing Status

# File: lambda/bda_parser/bda_client.py

response = self.bda_client.get_data_automation_status(
    invocationArn=invocation_arn
)

# The runtime API reports a finished job as 'Success' and returns the
# output location under outputConfiguration
if response['status'] == 'Success':
    return self._fetch_results_from_s3(response['outputConfiguration']['s3Uri'])

Status checking:

The get_data_automation_status API tells you how processing is going. Status values:

- Created: The job has been accepted but has not started yet

- InProgress: Document is being processed

- Success: Processing finished, results are in S3

- ServiceError or ClientError: Something went wrong (check the errorType and errorMessage fields)

BDA handles model selection and prompt optimization automatically; you just need to poll for completion.
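Polling can be sketched as a small loop with exponential backoff and an overall deadline. This is a minimal illustration, not the repository's implementation: wait_for_completion is a hypothetical name, the status lookup is injected as a callable so the loop can be tested without AWS, and the terminal status strings are passed in by the caller rather than hard-coded.

```python
import time
from typing import Any, Callable, Dict, Iterable


def wait_for_completion(
    get_status: Callable[[str], Dict[str, Any]],
    invocation_arn: str,
    success_statuses: Iterable[str],
    failure_statuses: Iterable[str],
    max_wait_seconds: float = 600.0,
    initial_delay: float = 2.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Poll until the job reaches a terminal status, backing off between calls."""
    success, failure = set(success_statuses), set(failure_statuses)
    delay, waited = initial_delay, 0.0
    while True:
        response = get_status(invocation_arn)
        status = response.get("status")
        if status in success:
            return response
        if status in failure:
            message = response.get("errorMessage", "no error message")
            raise RuntimeError(f"BDA job ended with status {status}: {message}")
        if waited >= max_wait_seconds:
            raise TimeoutError(f"gave up after {waited:.0f}s waiting for {invocation_arn}")
        sleep(delay)
        waited += delay
        # Double the wait each round, capped at max_delay
        delay = min(delay * 2, max_delay)
```

In the Lambda function, get_status could be lambda arn: bda_client.get_data_automation_status(invocationArn=arn), with the status strings listed above supplied as success_statuses and failure_statuses. Keep max_wait_seconds below the function timeout so the loop fails cleanly instead of being cut off.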

Processing the Results

# File: lambda/bda_parser/result_parser.py

from typing import Any, Dict

def parse_structured_output(self, s3_result_uri: str) -> Dict[str, Any]:
    # Bedrock Data Automation outputs JSON based on your blueprint schema
    raw_data = self._download_from_s3(s3_result_uri)
    # Extracted fields sit under 'inference_result' in the example output;
    # fall back to the top level in case the download helper already unwrapped them
    fields = raw_data.get('inference_result', raw_data)
    return {
        'personal_info': fields.get('personal_info', {}),
        'educational_info': fields.get('educational_info', {}),
        'experience': fields.get('experience', {}),
        'skills': fields.get('skills', {})
    }

What you get back:

BDA returns results in the structure you defined in your blueprint. The service handles:

- Schema validation: Output matches your defined structure

- Data type conversion: Text gets converted to appropriate types

- Confidence scoring: Shows how confident the AI is about each field

- Multi-format support: Works with PDFs, images, and various document formats
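Fields that hold several values can come back as a single comma-separated string, as skills.tools does in the example output later in this post. A downstream step might split those into lists. The sketch below is one possible post-processing step, not part of BDA or the repository; the function names and the choice of which fields to split are assumptions.

```python
from typing import Any, Dict, List, Sequence


def split_list_field(value: str) -> List[str]:
    """Split a comma-separated string into trimmed, de-duplicated items (order kept)."""
    seen, items = set(), []
    for part in value.split(","):
        item = part.strip()
        if item and item not in seen:
            seen.add(item)
            items.append(item)
    return items


def normalize_skills(
    result: Dict[str, Any],
    list_fields: Sequence[str] = ("languages", "certifications", "tools", "soft"),
) -> Dict[str, Any]:
    """Return the skills section with the chosen string fields turned into lists."""
    # Accept either the raw BDA output or an already unwrapped result
    skills = result.get("inference_result", result).get("skills", {})
    normalized: Dict[str, Any] = {}
    for name, value in skills.items():
        if name in list_fields and isinstance(value, str):
            normalized[name] = split_list_field(value)
        else:
            normalized[name] = value
    return normalized
```

Fields that are left out of list_fields, such as the free-form technical summary, pass through unchanged.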

Lambda Handler: Connecting the Pieces

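# File: lambda/handler.py

from urllib.parse import unquote_plus

def lambda_handler(event, context):
    # parser, blueprint_arn, output_key, and save_results come from the rest of the module (not shown)
    for record in event['Records']:
        bucket = record['s3']['bucket']['name']
        # Object keys arrive URL-encoded in S3 event notifications
        key = unquote_plus(record['s3']['object']['key'])
        # Process with BDA
        structured_data = parser.process_resume(bucket, key, blueprint_arn)
        # Save to output location
        save_results(structured_data, output_key)

How S3 events work: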

Lambda receives S3 event notifications when files are uploaded. Each Records entry contains the bucket name and object key of the uploaded file. This event-driven approach means processing starts within seconds of upload.
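To make the event shape concrete, here is a small sketch that pulls (bucket, key) pairs out of an S3 notification and keeps only newly created PDFs under input/. The helper name iter_uploaded_pdfs and the prefix default are assumptions for illustration; the Records, eventName, and s3 fields are part of the standard S3 event message.

```python
from typing import Any, Dict, Iterator, Tuple
from urllib.parse import unquote_plus


def iter_uploaded_pdfs(event: Dict[str, Any], prefix: str = "input/") -> Iterator[Tuple[str, str]]:
    """Yield (bucket, key) for each newly created PDF under the given prefix."""
    for record in event.get("Records", []):
        # Ignore deletions and other non-creation events
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the notification ("+" for spaces, %XX escapes)
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.startswith(prefix) and key.lower().endswith(".pdf"):
            yield bucket, key
```

Filtering on the prefix matters when results are written back to the same bucket: without it, each JSON file saved under output/ could trigger the function again.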

Deploying the System

Here’s how to get everything running:

- Clone and set up the project:

git clone https://github.com/windson/bda-usecases.git

cd bda-usecases

- Deploy the infrastructure:

# Deploy everything with CDK (blueprint, project, S3, Lambda, etc.)

cd infrastructure

uv sync

uv run cdk bootstrap # One-time setup

uv run cdk deploy

- What gets created:

- S3 bucket for resume storage

- Lambda function with Bedrock Data Automation permissions

- BDA blueprint for resume parsing

- S3 event notifications

- IAM roles and policies

Testing the System

Once deployed, testing is straightforward:

- Upload a resume: Drop a PDF resume into the S3 bucket’s input/ folder

- Automatic processing: S3 triggers Lambda, which calls BDA

- Get results: Structured JSON appears in the output/ folder

Example output:

{

"matched_blueprint": {

"arn": "arn:aws:bedrock:ap-south-1:###:blueprint/###",

"name": "resume-parser-hierarchical-###",

"confidence": 1

},

"document_class": {

"type": "Resume"

},

"split_document": {

"page_indices": [0, 1]

},

"inference_result": {

"skills": {

"technical": "Programming Languages: Python, JavaScript, Java, Go, SQL Cloud Platforms: AWS, Azure, Google Cloud Platform Frameworks: React, Django, Flask, Express.js Databases: PostgreSQL, MongoDB, Redis, DynamoDB DevOps: Docker, Kubernetes, Jenkins, Terraform, Git",

"languages": "English (Native), Spanish (Conversational)",

"certifications": "AWS Solutions Architect Associate (AWS-SAA-123456), Certified Kubernetes Administrator (CKA-789012)",

"tools": "Python, AWS, Docker, Kubernetes, PostgreSQL, JavaScript, React, Django, Flask, Express.js, JavaScript, React, Node.js, MongoDB, PostgreSQL, Redis, DynamoDB, Jenkins, Terraform, Git",

"soft": "Leadership, Team Collaboration, Problem Solving, Communication, Project Management"

},

"personal_info": {

"full_name": "John Smith",

"address": "123 Main Street, Seattle, WA 98101",

"phone": "(555) 123-4567",

"linkedin": "linkedin.com/in/johnsmith",

"email": "[email protected]"

},

"educational_info": {

"institution": "University of Washington, Seattle, WA",

"graduation_year": "June 2020",

"degree": "Bachelor of Science",

"gpa": "3.8",

"field_of_study": "Computer Science"

},

"experience": {

"key_achievements": "Lead development of cloud-native applications using AWS services, Reduced system latency by 40% through performance optimization, Led team of 5 engineers on microservices migration project, Implemented CI/CD pipeline reducing deployment time by 60%, Developed full-stack web applications for e-commerce platform, Built payment processing system handling $1M+ in monthly transactions, Improved application performance by 50% through code optimization",

"current_position": "Senior Software Engineer",

"current_company": "Tech Corp",

"years_total": "4+",

"previous_roles": "Software Engineer, StartupXYZ, July 2020 - December 2021"

}

}

}

Amazon Bedrock Data Automation Best Practices: Real-World Implementation Guide

Implement Stage-Based Development Workflows for Production Readiness

The most critical best practice for Amazon Bedrock Data Automation is establishing a robust development-to-production pipeline using BDA’s stage management capabilities. Organizations should always begin with DEVELOPMENT stage blueprints and projects for initial testing and validation before promoting to LIVE stage for production workloads. This approach, exemplified in real-world resume parsing systems, ensures that custom extraction schemas are thoroughly tested with sample documents before processing critical business data. The stage-based workflow prevents costly errors and allows teams to iterate on blueprint configurations safely, particularly when dealing with complex hierarchical data structures that require precise field extraction from unstructured documents, images, or audio files.
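One lightweight way to keep the two stages apart in code is to read the stage from configuration instead of hard-coding it. The sketch below assumes a hypothetical BDA_STAGE environment variable (not something BDA or the repository defines) and defaults to DEVELOPMENT so that an unconfigured deployment never hits LIVE blueprints by accident.

```python
import os
from typing import Mapping, Optional

VALID_STAGES = ("DEVELOPMENT", "LIVE")


def resolve_blueprint_stage(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the blueprint stage to use, defaulting to DEVELOPMENT.

    BDA_STAGE is a hypothetical variable name chosen for this example.
    """
    env = os.environ if env is None else env
    stage = env.get("BDA_STAGE", "DEVELOPMENT").strip().upper()
    if stage not in VALID_STAGES:
        raise ValueError(f"BDA_STAGE must be one of {VALID_STAGES}, got {stage!r}")
    return stage
```

The returned value can be passed as the stage entry in the blueprints parameter of invoke_data_automation_async.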

Design Hierarchical Blueprint Schemas for Scalable Data Extraction

When implementing custom output configurations, structure your blueprints using hierarchical schemas that organize extracted data into logical sections rather than flat field lists. For instance, in document processing scenarios like resume parsing, organize extraction fields into meaningful categories such as personal_info, educational_info, experience, and skills sections. This hierarchical approach not only improves data organization and downstream processing but also enhances maintainability and reusability across different projects. The blueprint catalog feature allows teams to share and standardize these schemas across the organization, with BDA’s intelligent document routing automatically selecting the most appropriate blueprint from up to 40 document blueprints based on layout matching, ensuring consistent extraction quality at scale.

Leverage Event-Driven Architecture for Real-Time Processing

Integrate BDA with AWS event-driven services like S3 Event Notifications and Lambda functions to create automated, real-time document processing pipelines. This architectural pattern eliminates manual intervention and typically starts processing within seconds of an upload, whether the input is a document, image, or media file. Configure Lambda functions with appropriate timeout settings (up to 15 minutes) and memory allocation to handle BDA’s asynchronous processing model effectively. Implement proper error handling with Dead Letter Queues (DLQ) and SNS notifications to capture and alert on processing failures, ensuring robust production operations. This event-driven approach scales automatically to handle concurrent uploads while maintaining cost efficiency through pay-per-execution pricing models.

Optimize Security and Monitoring for Enterprise Deployment

Implement comprehensive security and monitoring practices by utilizing customer-managed KMS keys for encryption at rest, configuring appropriate IAM roles with least-privilege access for BDA operations, and establishing CloudWatch logging for all processing activities. Tag all BDA resources consistently for cost allocation and access control, enabling proper governance across development and production environments. Monitor processing metrics, error rates, and blueprint performance through CloudWatch dashboards, and establish alerting thresholds for failed extractions or timeout scenarios. Regular blueprint performance reviews and optimization based on extraction accuracy metrics ensure continued effectiveness as document types and business requirements evolve, making BDA a reliable foundation for enterprise-scale intelligent document processing workflows.

Cleanup

When you’re done testing, clean up resources:

cdk destroy

Alternatively, for a full cleanup you can run these commands:

# Safe cleanup - shows what would be deleted but doesn't delete

./scripts/cleanup.sh

# Force cleanup - actually deletes all resources

./scripts/cleanup.sh true

Detailed Pricing Analysis (Estimates only)

Pricing Validation: The following analysis is based on the official Amazon Bedrock Data Automation pricing documentation and confirmed through AWS Pricing API data.

1. Amazon Bedrock Data Automation (Primary Cost Driver)

Service Configuration:

- Custom blueprint with hierarchical schema (4 main sections: Personal Info, Education, Experience, Skills)

- Exact field count: 20 fields (5 fields per section)

- Document processing with structured field extraction

- Development → Live promotion workflow

Pricing Structure (Confirmed from AWS Documentation):

- Documents Custom Output: $0.040 per page processed (for blueprints with ≤30 fields)

- Documents Standard Output: $0.010 per page processed

- Additional Field Surcharge: $0.0005 per field per page (only for blueprints >30 fields)

Monthly Cost Calculation (1,000 resumes, 2 pages avg):

- Pages processed: 2,000 pages

- Blueprint field count: 20 fields (under 30-field threshold)

- Base custom pricing applies: 2,000 × $0.040 = $80.00/month

- Additional field charges: $0.00 (20 fields ≤ 30 field limit)

- Total BDA Cost: $80.00/month

Blueprint Field Breakdown:

- Personal Info: 5 fields (full_name, email, phone, address, linkedin)

- Educational Info: 5 fields (institution, degree, graduation_year, gpa, field_of_study)

- Experience: 5 fields (current_position, current_company, years_total, key_achievements, previous_roles)

- Skills: 5 fields (technical, soft, languages, certifications, tools)

- Total: 20 fields ✅ (No additional field charges)
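The BDA arithmetic above can be reproduced with a few lines of Python, which also makes it easy to see what happens if the blueprint grows past 30 fields. The rates are the ones quoted in this section; the function and constant names are illustrative, and the numbers are estimates rather than a billing guarantee.

```python
from typing import Dict, List

# Field names per section, as listed in the breakdown above
BLUEPRINT_FIELDS: Dict[str, List[str]] = {
    "personal_info": ["full_name", "email", "phone", "address", "linkedin"],
    "educational_info": ["institution", "degree", "graduation_year", "gpa", "field_of_study"],
    "experience": ["current_position", "current_company", "years_total", "key_achievements", "previous_roles"],
    "skills": ["technical", "soft", "languages", "certifications", "tools"],
}

CUSTOM_OUTPUT_PER_PAGE = 0.040   # USD per page, blueprints with up to 30 fields
EXTRA_FIELD_PER_PAGE = 0.0005    # USD per field per page beyond the threshold
INCLUDED_FIELDS = 30


def bda_monthly_cost(documents: int, pages_per_document: float, field_count: int) -> float:
    """Estimate the monthly BDA custom-output cost in USD."""
    pages = documents * pages_per_document
    extra_fields = max(0, field_count - INCLUDED_FIELDS)
    return pages * (CUSTOM_OUTPUT_PER_PAGE + extra_fields * EXTRA_FIELD_PER_PAGE)


field_count = sum(len(fields) for fields in BLUEPRINT_FIELDS.values())
print(field_count, round(bda_monthly_cost(1000, 2, field_count), 2))  # 20 80.0
```

With the same volume, a hypothetical 35-field blueprint would add 5 × $0.0005 per page, bringing the estimate to about $85 per month.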

2. AWS Lambda

Configuration:

- Memory: 1024 MB (1 GB)

- Runtime: Python 3.11

- Timeout: 15 minutes

- Average execution: 30 seconds per resume

- Requests: 1,000/month

Pricing (Asia Pacific Mumbai):

- Requests: $0.0000002 per request

- Compute (x86): $0.0000166667 per GB-second (Tier 1)

Monthly Cost Calculation:

- Request charges: 1,000 × $0.0000002 = $0.0002

- Compute charges: 1,000 × 30s × 1GB × $0.0000166667 = $0.50

- Total Lambda: $0.50/month
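The Lambda figure follows from the same kind of arithmetic. The sketch below uses the Mumbai rates quoted above; the function name is illustrative, and the result is an estimate before any free tier is applied.

```python
REQUEST_PRICE = 0.0000002        # USD per request
GB_SECOND_PRICE = 0.0000166667   # USD per GB-second (x86, Tier 1)


def lambda_monthly_cost(invocations: int, avg_seconds: float, memory_mb: int) -> float:
    """Estimate the monthly Lambda cost in USD before any free tier."""
    gb_seconds = invocations * avg_seconds * (memory_mb / 1024)
    return invocations * REQUEST_PRICE + gb_seconds * GB_SECOND_PRICE


# 1,000 invocations x 30 s x 1 GB = 30,000 GB-seconds
print(round(lambda_monthly_cost(1000, 30, 1024), 2))  # 0.5
```

Compute time dominates here: the request charge is a fraction of a cent, so halving the memory setting or the average duration roughly halves the bill.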

Free Tier Benefits:

- First 12 months: 1M requests/month free

- First 12 months: 400,000 GB-seconds/month free

- Effective Lambda cost in first year: $0.00

3. Amazon S3

Storage Requirements:

- Input: 1,000 resumes × 2MB = 2GB

- Output: 1,000 JSON files × 10KB = 0.01GB

- Total storage: ~2GB/month

Pricing (Asia Pacific Mumbai):

- Standard Storage: $0.025 per GB/month (first 50TB)

Monthly Cost Calculation:

- Storage: 2GB × $0.025 = $0.05/month

Free Tier Benefits:

- First 12 months: 5GB storage free

- Effective S3 cost in first year: $0.00

4. Amazon SQS (Dead Letter Queue)

Usage: Error handling only

- Estimated: 10 messages/month (1% failure rate)

- Standard Queue: $0.0000004 per request

Monthly Cost:

- 10 requests × $0.0000004 = $0.000004 (negligible)

Free Tier:

- 1 million requests/month free permanently

- Effective SQS cost: $0.00

Total Monthly Cost Summary

| Service | Monthly Cost | Free Tier Benefit | After Free Tier |
|---|---|---|---|
| Bedrock Data Automation | $80.00 | None | $80.00 |
| AWS Lambda | $0.50 | $0.50 (first year) | $0.50 |
| Amazon S3 | $0.05 | $0.05 (first year) | $0.05 |
| Amazon SQS | $0.00 | Covered by free tier | $0.00 |
| Total (First Year) | $80.00 | $0.55 savings | $80.00 |
| Total (After First Year) | $80.55 | N/A | $80.55 |

Reference: Amazon Bedrock Pricing & AWS Pricing MCP Server

Beyond Resumes: Other Use Cases

This same approach works for many document processing challenges:

- Invoices: Extract vendor info, amounts, and line items

- Contracts: Identify key terms, dates, and parties

- Forms: Process insurance claims, loan applications, or surveys

- Medical Records: Extract patient info and treatment details (with proper compliance)

You can create different blueprints for each document type while keeping the same infrastructure.

Wrapping Up

We’ve built a system that solves a real problem: turning unstructured resume documents into structured data that applications can actually use. The system handles scaling automatically and processes documents within minutes of upload.

What we accomplished:

- Automated processing: No more manual data entry

- Consistent output: Same structure regardless of input format

- Scalable solution: Handles 1 document or 1,000 without changes

- Cost-effective: Pay only for what you process

The key insight here is that document processing doesn’t have to be complex. With the right tools and architecture, you can solve these problems with relatively simple code that focuses on your business logic rather than AI model management.

This approach works for any document processing challenge where you need to extract structured data from unstructured sources. The patterns and techniques we’ve covered apply broadly across different document types and use cases.

The complete code and deployment instructions are available in our GitHub repository.

If you found this tutorial insightful, please do bookmark 🔖 it! Also please do share it with your friends and colleagues!