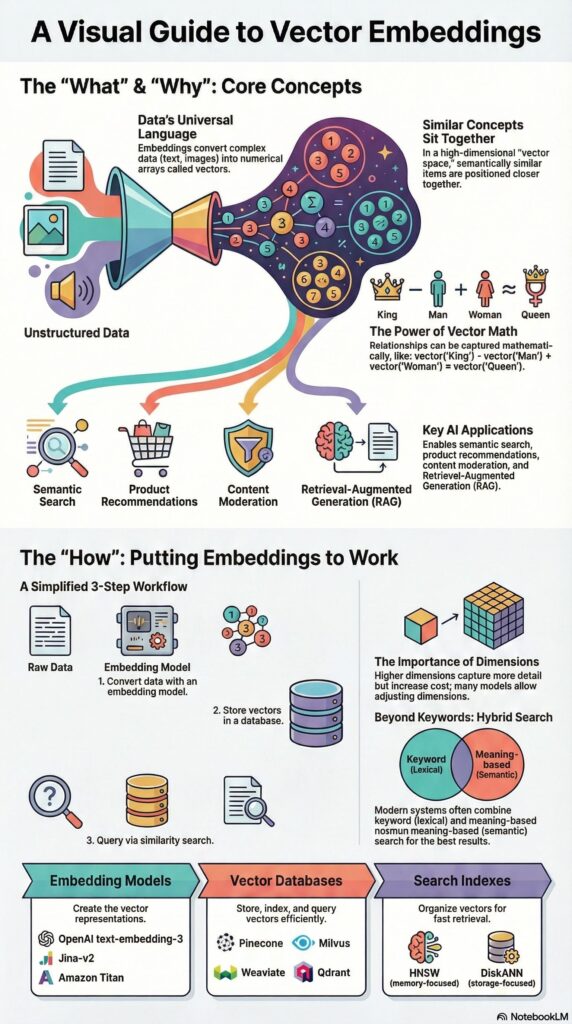

If you’re building anything exciting in the world of AI—whether it’s a smart chatbot, a recommendation engine, or the next-generation search tool—you’ve likely encountered the term Vector Embeddings. They are the secret language that transforms complex, unstructured data into intelligence that machines can truly understand.

But why do we need them, and how exactly do they work? Let’s dive into the core concepts, models, and best practices that will empower you to build faster, more accurate AI applications.

The Problem: When Keywords Fail

You see, traditional data systems hit a wall when dealing with unstructured content like documents, images, audio, or customer reviews. Databases are great at handling structured rows and columns, but they can’t grasp semantic meaning or contextual relationships. If you search a traditional system for “how do I delete my old payment info,” it only looks for those exact keywords. It might miss a perfect match titled, “Delete saved payment methods,” simply because the phrasing is different.
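To see the failure concretely, here is a minimal sketch of naive keyword matching. It assumes a crude scorer that only counts shared words. Real keyword engines such as BM25 weight terms and drop stopwords, so this exaggerates the effect. The second document title is invented for illustration, and tokenize and keyword_score are hypothetical helper names. The off-topic document ranks first simply because it shares more filler words with the query.

```python
import re

def tokenize(text):
    """Lowercase and split into words: a crude stand-in for a keyword index."""
    return set(re.findall(r"[a-z]+", text.lower()))

def keyword_score(query, doc):
    """Count the words query and doc share; synonyms and meaning are invisible."""
    return len(tokenize(query) & tokenize(doc))

query = "how do I delete my old payment info"
docs = [
    "Delete saved payment methods",          # the document the user actually wants
    "How do I update my old contact info",   # hypothetical, off-topic document
]

# Rank documents purely by keyword overlap with the query.
ranked = sorted(docs, key=lambda d: keyword_score(query, d), reverse=True)
for doc in ranked:
    print(keyword_score(query, doc), doc)
```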

The fundamental challenge is this: Computers operate mathematically, but human language and media are abstract. How do you convert meaning, context, and nuance into numbers?

The Solution: Encoding Meaning with Vector Embeddings

This is where Vector Embeddings come to the rescue!

A vector embedding is a numerical representation that converts complex data (words, sentences, images) into a structured array of floating-point numbers—a high-dimensional vector. An embedding model, often built using neural networks like transformers, performs this mathematical transformation, mapping data into a high-dimensional space called a vector space.

The magic is in the proximity: When data points are converted into vectors, similar concepts or meanings are mapped closer together in the vector space, while dissimilar items are positioned farther apart. This spatial encoding allows AI algorithms to quantify relationships between pieces of content using mathematical operations like cosine similarity or Euclidean distance, enabling them to understand meaning, not just keywords.
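The two measures mentioned above can be written in a few lines of plain Python. In this sketch, the three-dimensional vectors are invented by hand for illustration; real models output hundreds or thousands of dimensions. The helper names are hypothetical. Higher cosine similarity and lower Euclidean distance both mean "closer in meaning".

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1.0 = same direction, 0.0 = orthogonal."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def euclidean_distance(a, b):
    """Straight-line (L2) distance between a and b: smaller = closer."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical hand-made "embeddings" (not produced by any real model).
delete_payment_query = [0.9, 0.8, 0.1]
delete_saved_methods = [0.85, 0.75, 0.2]
holiday_recipes = [0.1, 0.2, 0.95]

print(cosine_similarity(delete_payment_query, delete_saved_methods))  # close to 1
print(cosine_similarity(delete_payment_query, holiday_recipes))       # much lower
print(euclidean_distance(delete_payment_query, delete_saved_methods))
print(euclidean_distance(delete_payment_query, holiday_recipes))
```

For vectors normalized to unit length, cosine similarity equals the plain dot product, which is why many systems normalize embeddings up front.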

Vector Embedding Models and Types

To create these powerful representations, you need specialized embedding models.

How Embeddings Are Created

Neural networks learn to generate vectors through representation learning, adjusting their internal weights iteratively during training to encode meaningful relationships within the data.

We can categorize embeddings based on the type of data they handle:

| Embedding Type | Purpose | Example Models/Techniques |
|---|---|---|
| Word Embeddings | Capture semantic and syntactic relations between individual words. | Word2Vec, FastText, GloVe. |
| Sentence/Document Embeddings | Capture the semantic meaning and context of entire phrases, paragraphs, or documents. | BERT and its variants (e.g., RoBERTa, DistilBERT), Sentence-BERT (SBERT), Doc2Vec, Jina Embeddings v2. |
| Image Embeddings | Convert visual information (pixels, features) into vectors for classification or search. | Convolutional Neural Networks (CNNs), ResNet, VGG, CLIP. |
| Multimodal Embeddings | Create aligned representation spaces for different data types, like text and images, enabling cross-modal search. | CLIP (Contrastive Language-Image Pretraining). |
| Specialized Embeddings | Represent complex structures or sequences. | Graph Embeddings (GraphSAGE, Node2Vec), Audio Embeddings, Product Embeddings. |

Widely used options today include commercial APIs such as Amazon Titan Text Embeddings, Cohere Embed, and OpenAI's text-embedding-3 models, as well as open-source models such as BERT, BAAI/bge-m3, jinaai/jina-embeddings-v4, Qwen/Qwen3-Embedding-0.6B, and sentence-transformers/all-MiniLM-L6-v2, among many others. For long documents, models like Jina Embeddings v4 stand out by accepting inputs of up to 32,768 tokens, far beyond the 512-token limit of the original BERT models.

Where Vector Embeddings Shine: Key Use Cases

Vector embeddings are the foundational technology for modern AI applications.

- Retrieval-Augmented Generation (RAG): RAG is arguably the most important use case today. It lets Large Language Models (LLMs) draw on external, up-to-date knowledge bases. The system converts the user's query into a vector, searches a document database for semantically similar passages, and feeds that context to the LLM so it can generate a grounded, comprehensive answer. This reduces, though does not eliminate, hallucinations.

- Semantic Search: This is search powered by meaning. Instead of relying on keyword matching, semantic search uses the vector distance between your query vector and indexed document vectors to retrieve results that are conceptually aligned, even if the exact words are different.

- Recommendation Systems: By converting products or content into vectors, systems can efficiently find items similar to those a user has already engaged with, enabling highly personalized product and content recommendations.

- Content Grouping and Classification: You can cluster similar documents, emails, or customer reviews by analyzing the proximity of their vector embeddings, helping to discover content categories quickly.
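The retrieval step behind RAG and semantic search reduces to "rank by similarity, keep the top k, hand the results on". The sketch below assumes the embeddings were already computed. The vectors are invented for illustration, and retrieve and build_prompt are hypothetical helper names. In a real system an embedding model produces the vectors, a vector database performs the ranking, and the prompt is sent to an LLM.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

def retrieve(query_vec, corpus, k=2):
    """Return the k (text, score) pairs whose vectors are most similar to query_vec."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in corpus]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

def build_prompt(question, passages):
    """Hypothetical prompt template: retrieved passages become context for the LLM."""
    context = "\n".join(f"- {text}" for text, _ in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical pre-computed embeddings; in practice an embedding model produces these.
corpus = [
    ("Delete saved payment methods", [0.85, 0.75, 0.20]),
    ("Update your billing address", [0.60, 0.70, 0.30]),
    ("Holiday cookie recipes", [0.10, 0.20, 0.95]),
]
query = "how do I delete my old payment info"
query_vec = [0.90, 0.80, 0.10]  # hypothetical embedding of `query`

top = retrieve(query_vec, corpus, k=2)
print(build_prompt(query, top))
```

On its own, the retrieve step is semantic search. RAG adds the prompt-building step and the LLM call.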

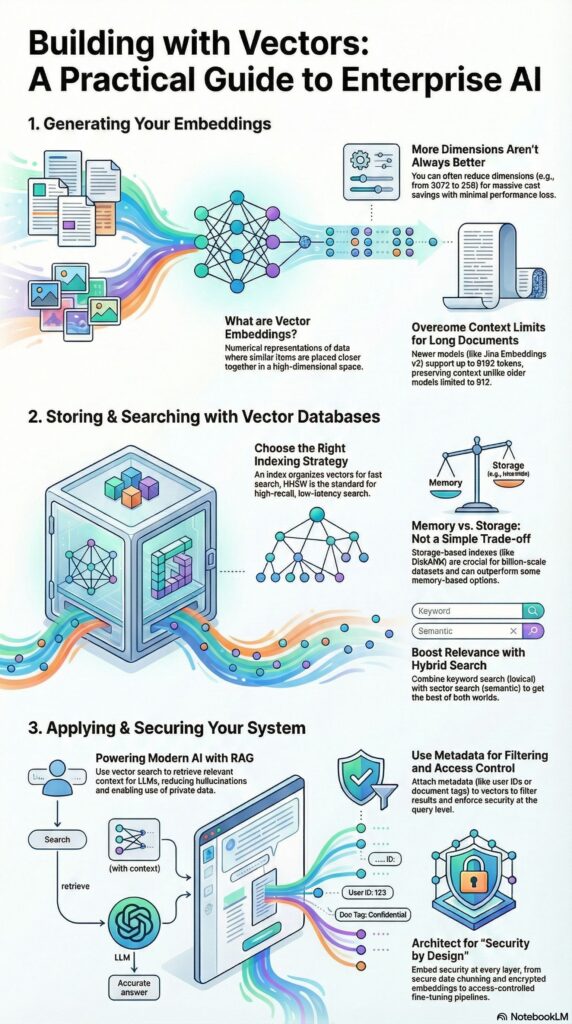

Storing and Managing Your Vectors: The Vector Database

Once you generate embeddings, you need a place optimized to store, index, and query them efficiently. Without vector-specific extensions, traditional databases are generally ill-suited to high-dimensional vector data and similarity search.

Introducing the Vector Database

A Vector Database is a data storage system designed specifically to store vectors and query them efficiently through similarity-based search.

Popular Vector Database Options:

- Specialized Vector Databases: Pinecone, Qdrant, Milvus, Weaviate. These systems are purpose-built for vector workloads, with a focus on high performance and scalability.

- Vector Search in Existing Data Platforms: MongoDB (Atlas Vector Search), PostgreSQL (using extensions such as pgvector), OpenSearch, Redis, and Amazon S3 Vectors let you store vectors alongside the data you already manage.

The Importance of Indexing

Indexing is vital for performing efficient searches, especially as data scales. An index is a data structure that organizes vectors for fast Approximate Nearest Neighbor Search (ANNS), avoiding the slow process of searching every single vector (brute force).

Key Indexing Algorithms for Dense Vectors:

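- HNSW (Hierarchical Navigable Small World): Widely adopted and generally excellent for large-scale similarity search. It builds a multi-layered proximity graph: a query starts in the sparse upper layers, which allow long jumps across the space, and descends to denser lower layers for a fine-grained search. Weaviate and Qdrant, among others, support HNSW.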

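- IVF (Inverted File Index): A cluster-based approach. During indexing, vectors are partitioned into clusters (typically with k-means), each represented by a centroid. At query time, only the vectors in the few clusters whose centroids are closest to the query are scanned, which dramatically reduces the search space at some cost in recall.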

- DiskANN: A graph-based index specifically optimized for performance when vectors and indexes must be stored on high-speed SSDs (disk/storage-based setups).
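The IVF idea described above fits in a short toy implementation. This is a minimal sketch, not how FAISS or any database implements it. Real IVF indexes train centroids on a sample, use optimized code, and often compress the stored vectors. The class, the helper names, and the n_lists / n_probe parameters are hypothetical, although they mirror the roles of FAISS's nlist and nprobe. The data is synthetic.

```python
import random

def squared_l2(a, b):
    """Squared Euclidean distance (same ordering as L2, without the sqrt)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(vectors, k, iterations=10, seed=0):
    """Plain k-means; the resulting centroids act as the IVF coarse quantizer."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(iterations):
        buckets = [[] for _ in range(k)]
        for v in vectors:
            nearest = min(range(k), key=lambda c: squared_l2(v, centroids[c]))
            buckets[nearest].append(v)
        for c, members in enumerate(buckets):
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

class ToyIVFIndex:
    """Inverted file: each centroid owns a list of (vector_id, vector) entries."""

    def __init__(self, vectors, n_lists, seed=0):
        self.centroids = kmeans(vectors, n_lists, seed=seed)
        self.lists = [[] for _ in range(n_lists)]
        for vid, v in enumerate(vectors):
            self.lists[self._closest_lists(v, 1)[0]].append((vid, v))

    def _closest_lists(self, v, n):
        """Indices of the n centroids nearest to v."""
        order = sorted(range(len(self.centroids)),
                       key=lambda c: squared_l2(v, self.centroids[c]))
        return order[:n]

    def search(self, query, k=3, n_probe=1):
        """Scan only the n_probe closest clusters instead of every vector."""
        candidates = []
        for c in self._closest_lists(query, n_probe):
            candidates.extend(self.lists[c])
        candidates.sort(key=lambda item: squared_l2(query, item[1]))
        return [vid for vid, _ in candidates[:k]]

# Hypothetical data: three well-separated 2-D blobs of 50 points each.
rng = random.Random(42)
data = []
for cx, cy in [(0, 0), (10, 10), (0, 10)]:
    data += [[cx + rng.gauss(0, 0.5), cy + rng.gauss(0, 0.5)] for _ in range(50)]

index = ToyIVFIndex(data, n_lists=3)
query = [10.2, 9.8]
print(index.search(query, k=3, n_probe=1))  # scans only one cluster's vectors
```

Raising n_probe trades speed for recall. With n_probe equal to n_lists, every list is scanned and the result matches exact brute-force search.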

When to Use Which: Models, Dimensions, and Storage

Choosing the right approach depends heavily on your application’s constraints: accuracy, speed, cost, and scale.

1. Choosing Your Model (Proprietary vs. Open Source)

- Go with Commercial APIs (e.g., OpenAI, Amazon Titan Text Embeddings, Cohere Embed) if: You need minimal setup time, rapid prototyping, and consistently high-quality results for general tasks. The cost scales with usage.

- Go with Open Source (e.g., BERT, Sentence-Transformers) if: You have strict budget constraints, require customization, need to fine-tune on domain-specific jargon (e.g., medical or legal text), or need complete control over hosting and data privacy.

2. Selecting the Right Dimensions and Embedding Types

- Dimensionality (Size of the vector): Higher dimensionality can capture a richer representation and often improves accuracy, but storage and compute grow linearly with the number of dimensions, and query latency rises with them.

- Best Practice: Don't automatically use the highest available dimension. Some modern models (like OpenAI's text-embedding-3-large, via the API's "dimensions" parameter) let you shorten embeddings (e.g., down to 256 or 1024 dimensions) while retaining competitive performance, a significant efficiency gain. Values of 512 or 1024 dimensions are often cited as a good balance point.


- Text Context: For complex conversational AI or legal document analysis, use contextual models like BERT for deep understanding. For fast, real-time sentiment tracking on simple data, the speed of Word2Vec might be sufficient.
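A quick back-of-the-envelope calculation shows why dimensionality matters, followed by a sketch of manual shortening. Assumptions: vectors are stored as 32-bit floats (4 bytes per value), and index overhead is ignored. raw_storage_gb and truncate_and_normalize are hypothetical helpers. Keeping only the leading components makes sense only for models trained to support shortening, such as the text-embedding-3 models. Re-normalizing afterwards keeps cosine and dot-product scores comparable. Applying this to an arbitrary model may degrade quality badly.

```python
import math

def raw_storage_gb(n_vectors, dims, bytes_per_value=4):
    """Raw size of float32 embeddings in GB (decimal), excluding index overhead."""
    return n_vectors * dims * bytes_per_value / 1e9

def truncate_and_normalize(vec, dims):
    """Keep the first `dims` components, then rescale to unit L2 length."""
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]

# Storage for 10 million vectors scales linearly with dimensionality.
for d in (3072, 1024, 256):
    print(f"{d:>5} dims: {raw_storage_gb(10_000_000, d):6.1f} GB")

# Toy "full" embedding shortened to 2 dimensions and re-normalized.
print(truncate_and_normalize([3.0, 4.0, 12.0], 2))
```

At 10 million vectors, this works out to roughly 122.9 GB at 3072 dimensions, 41.0 GB at 1024, and 10.2 GB at 256, before any index structures.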

3. Handling Small vs. Large Data

| Scenario | Recommendation | Rationale |
|---|---|---|
| Small data sets (e.g., thousands of vectors) | Use exact (brute-force) search, such as computing cosine similarity against every vector, or a FAISS IndexFlatL2 index. | These are straightforward to implement, exact (since you compare against every vector), and require no index-building overhead. |
| Large data sets (millions to billions of vectors) | Use Approximate Nearest Neighbor Search (ANNS) algorithms like IVF or HNSW. | Speed is paramount. HNSW is a robust choice at very large scale, provided you have enough memory for its graph. |
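For the small-data case, exhaustive search takes only a few lines. This sketch uses plain Python rather than FAISS. FAISS's IndexFlatL2 performs the same exhaustive comparison in optimized code and likewise ranks by squared L2 distance. Because it is exact, a routine like this is commonly used as ground truth to measure the recall of an ANN index on the large-data side. flat_search and recall_at_k are hypothetical helper names, and the vectors are toy values.

```python
import heapq

def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def flat_search(query, vectors, k):
    """Exact k-NN: compare the query with every stored vector, O(n * d) per query."""
    return heapq.nsmallest(k, range(len(vectors)),
                           key=lambda i: squared_l2(query, vectors[i]))

def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true top-k neighbors that an approximate search returned."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)

vectors = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.9, 1.2]]
exact = flat_search([1.0, 1.0], vectors, k=2)
print(exact)                        # [1, 3]
print(recall_at_k([1, 2], exact))   # 0.5
```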

4. Local vs. Disk Mode (The Scalability Challenge)

When your index size grows to hundreds of gigabytes—as is common with RAG systems handling millions of documents—you quickly exceed the limits of main memory (RAM).

- Memory-Based (Local/RAM): Generally fastest, but constrained by available RAM.

- Storage-Based (Disk Mode): Necessary for massive datasets. Indexes like DiskANN are optimized to retrieve vectors efficiently from high-speed NVMe SSDs. Notably, some research suggests that well-optimized storage-based setups do not necessarily perform worse than some memory-based setups, showing that specialized disk indexing can work well at scale.

Essential Production Best Practices

To maximize the performance and security of your Vector Database infrastructure, keep these best practices in mind:

- Leverage Metadata Filtering for Efficiency: Always store relevant metadata (like creation date, tags, or region) alongside your vector embeddings [84, 85]. Before performing an expensive semantic search, use metadata filters to narrow down the set of documents to search within. This simple step can substantially improve query speed and the relevance of results.

- Manage Latency with Scalable Architecture: For production environments with unpredictable usage spikes (like e-commerce holiday traffic), select a vector database solution designed for scalability, such as a managed serverless architecture that can automatically adjust resources based on workload.

- Enforce Security with Access Controls: In enterprise AI, data security is paramount. Use metadata fields to capture user permissions (document-level access control tags) and enforce authorization filtering during retrieval, so that users only retrieve information they are cleared to see.

- Consider Data Compression (Quantization): To reduce storage costs and memory footprint, particularly in large-scale deployments, explore quantization techniques (like binary or product quantization). Binary quantization, for instance, stores one bit per dimension instead of a 32-bit float, cutting raw vector storage by about 32x while retaining acceptable accuracy for many applications.

- Ensure Query Consistency: A crucial pitfall to avoid is dimension mismatch. Always ensure that the query vector has exactly the same dimensionality as the vectors stored in your index, which in practice means embedding queries with the same model (and settings) used to build the index. A mismatch typically causes queries to fail outright or to return meaningless results.
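The filtering, access-control, and consistency practices above can be combined in one retrieval path. This is a minimal in-memory sketch, not how a vector database implements it; production systems typically apply filters inside the index engine. Record, filtered_search, and the allowed_groups field are hypothetical names. The dimension check rejects a malformed query before any scoring happens.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Record:
    doc_id: str
    vector: list
    metadata: dict
    allowed_groups: set = field(default_factory=set)  # hypothetical ACL tag field

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(x * x for x in b)))

def filtered_search(query_vec, records, index_dim, user_groups, filters, k=3):
    """Validate the query, filter by permissions and metadata, then rank by similarity."""
    if len(query_vec) != index_dim:
        raise ValueError(f"query has {len(query_vec)} dims, index expects {index_dim}")
    candidates = [
        r for r in records
        if r.allowed_groups & user_groups  # user must share at least one group
        and all(r.metadata.get(key) == value for key, value in filters.items())
    ]
    candidates.sort(key=lambda r: cosine_similarity(query_vec, r.vector), reverse=True)
    return [r.doc_id for r in candidates[:k]]

# Hypothetical records with toy 2-D vectors.
records = [
    Record("eu-policy", [0.9, 0.1], {"region": "eu"}, {"staff"}),
    Record("us-policy", [0.9, 0.1], {"region": "us"}, {"staff"}),
    Record("eu-payroll", [0.8, 0.2], {"region": "eu"}, {"hr"}),
]
print(filtered_search([1.0, 0.0], records, 2, {"staff"}, {"region": "eu"}))
```

Here the payroll document never reaches the ranking step for a user outside the "hr" group, and the US document is excluded by the region filter.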

Conclusion: The Foundation of Intelligent Systems and the Role of Vector Embeddings

Vector embeddings are more than just a passing trend; they are the fundamental building blocks of modern AI, powering everything from conversational AI to advanced Semantic Search and RAG systems.

By transforming complex data into a structured, mathematical format, embeddings enable machines to interact with the world based on meaning and context, ushering in a new era of intelligent applications. The choice of the right model, proper Vector Database indexing, and careful management of dimensions and scalability will define the success of your next AI project. Start experimenting with these powerful tools today and unlock the true potential of your data!